Update 29 Feb 2020.

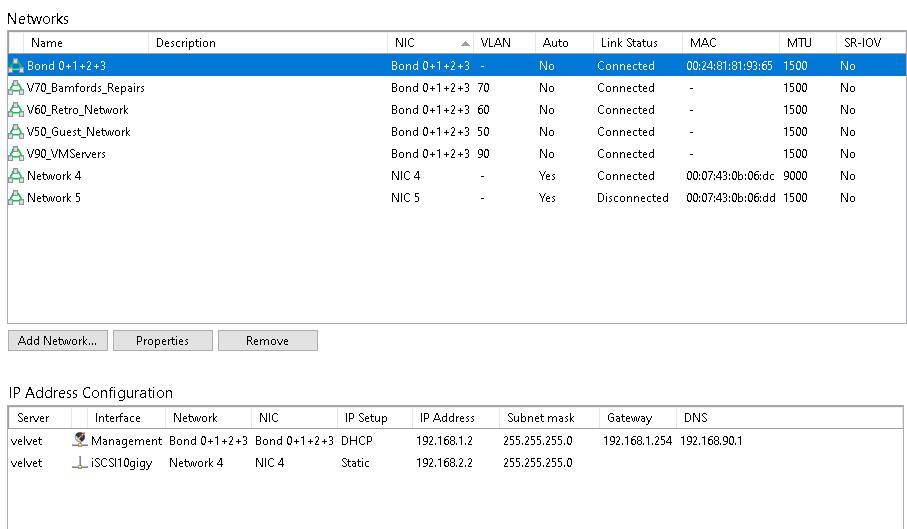

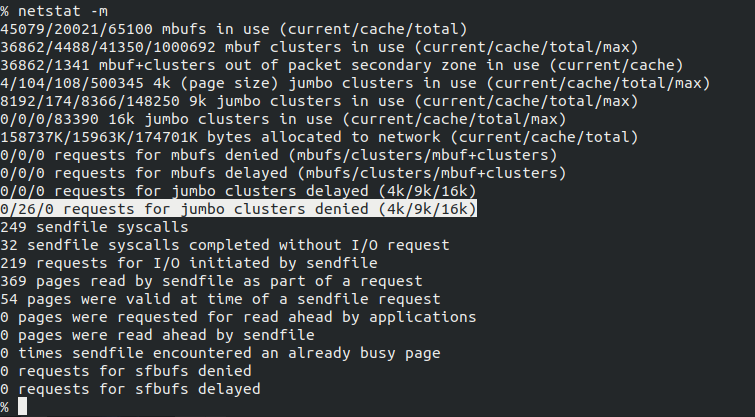

There is an issue with jumbo frames (MTU 9000) when using iSCSI; it doesn't seem to affect NFS/SMB. I recommend not using MTU 9000 and using MTU 1500 instead. This problem doesn't seem to affect Debian/Ubuntu. More information can be found here: https://forums.freenas.org/index.php?threads/10-gig-networking-primer.25749/

Known symptoms:

- The link locks up and no packets are received or sent.
- CPU usage goes to 100%.
- `netstat -m` in FreeNAS shows the following:

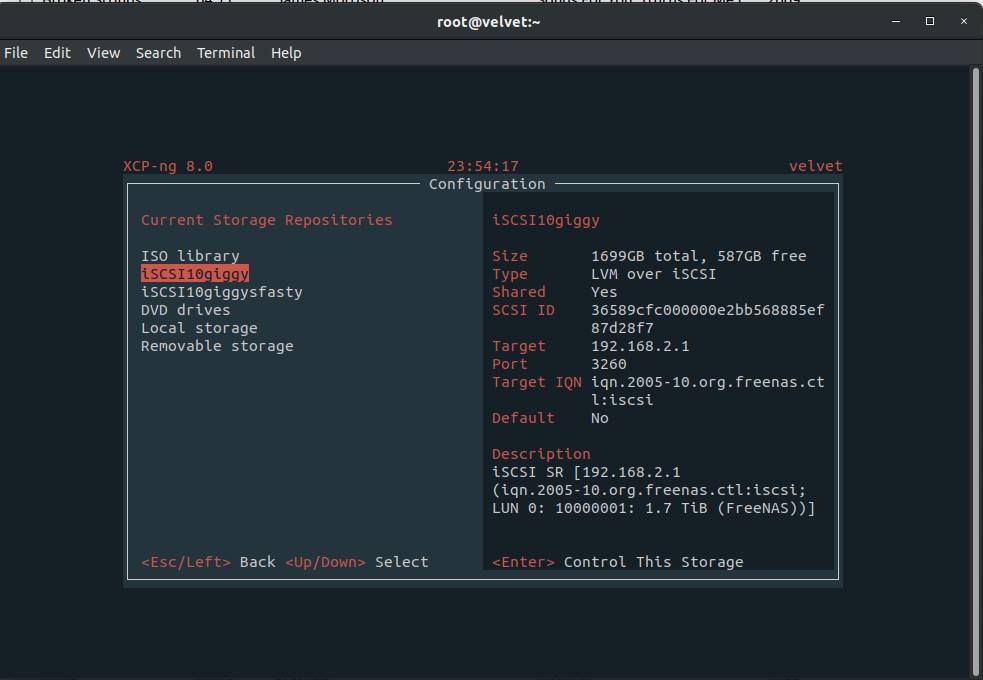

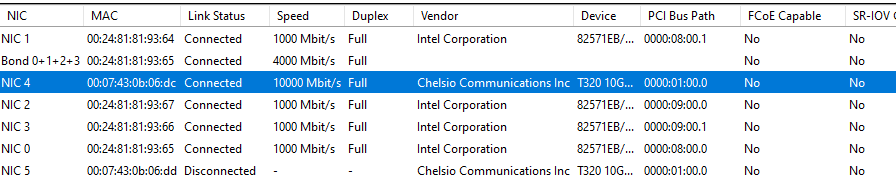
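If you want to keep an eye out for this on the FreeNAS box, a small script can pull the "denied" counters out of `netstat -m`. This is a minimal sketch that assumes the FreeBSD-style `netstat -m` lines of the form `a/b/c requests for ... denied (x/y/z)`; the function names are my own, and treating any non-zero jumbo-cluster denial as a warning sign is an assumption, not something FreeNAS itself reports.

```python
import re

# Assumed FreeBSD-style netstat -m line, e.g.
# "0/0/0 requests for jumbo clusters denied (4k/9k/16k)"
DENIED_RE = re.compile(
    r"^\s*(?P<counts>\d+(?:/\d+)*)\s+requests for (?P<what>.+?) denied\s+\((?P<labels>[^)]*)\)"
)


def denied_counters(netstat_output: str) -> dict[str, int]:
    """Return {'<pool>:<label>': count} for every 'denied' line in netstat -m output."""
    result: dict[str, int] = {}
    for line in netstat_output.splitlines():
        m = DENIED_RE.match(line)
        if not m:
            continue  # not a "denied" counter line
        counts = [int(c) for c in m.group("counts").split("/")]
        labels = m.group("labels").split("/")
        # Pair each count with its label, e.g. "jumbo clusters:9k" -> 0
        for label, count in zip(labels, counts):
            result[f"{m.group('what')}:{label}"] = count
    return result


def jumbo_pressure(netstat_output: str) -> bool:
    """True if any jumbo-cluster allocation was denied (assumed sign of mbuf exhaustion)."""
    return any(
        count > 0
        for key, count in denied_counters(netstat_output).items()
        if key.startswith("jumbo clusters:")
    )
```

On the FreeNAS box you could feed it the output of `subprocess.run(["netstat", "-m"], capture_output=True, text=True).stdout`.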

So I've been using a 4Gbps LAG for iSCSI as well as for the main network on the same LAG interface, and I wanted to move the iSCSI traffic to its own network because the speed of the VMs and the network can drop when there are a lot of VMs and a lot of traffic. I could have bought another 4Gbps NIC, but I decided to take the plunge and upgrade to 10Gig, so I bought 3 Chelsio T320 cards, which I paid £23 for on eBay. These cards seem to be popular in the forums. They are dual-port cards, and there is one spare port on Velvet, so I could set up HA with another XCP-ng server.

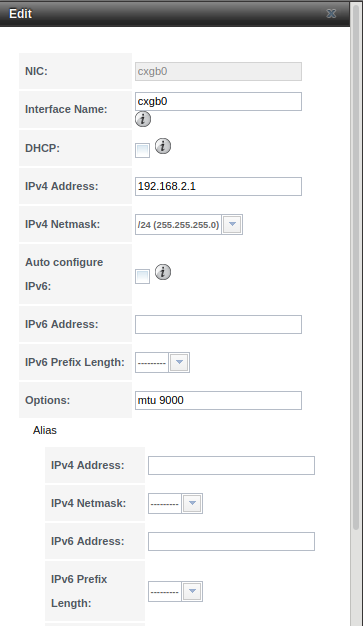

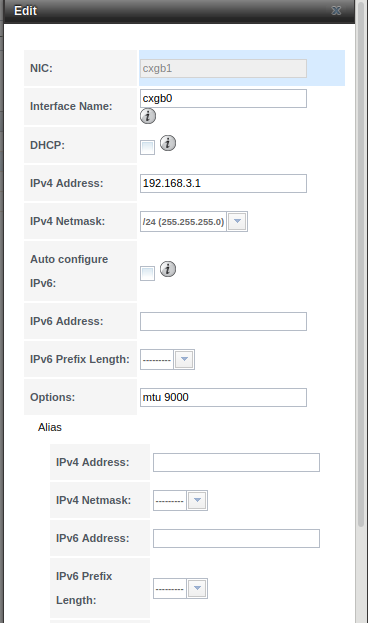

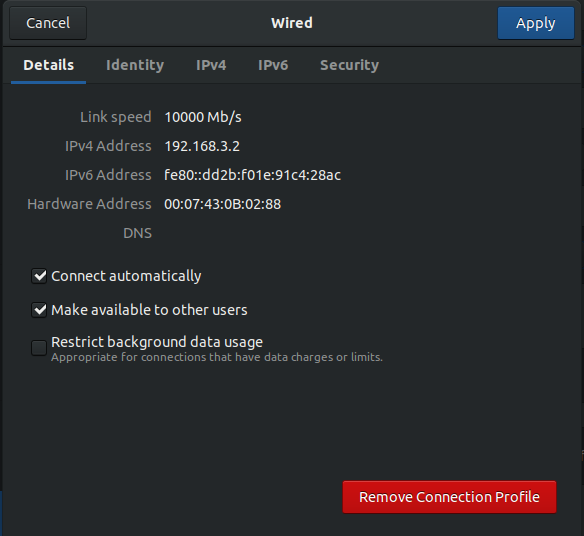

I tested these cards in FreeNAS, XCP-ng and Ubuntu, and all three OSes detected the cards out of the box, which is great. I tested them on 2 motherboards connected by a direct link (no switch) using an SFP+ DAC (Direct Attached Copper) cable. In my testing I didn't have any issues. I set the MTU (maximum transmission unit) to 9000 on both machines: jumbo frames carry up to 9000 bytes per packet instead of 1500, so the same amount of data needs fewer packets and less per-packet overhead on RX and TX. As there are many VMs and loads of disk IO, I wanted to tweak the 10gig network as much as I could. I have not tested the cards in Windows 10, but they work in Windows 7.
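To put numbers on what jumbo frames actually change, here is a rough sketch of the per-frame arithmetic. It assumes standard Ethernet framing overhead (preamble, MAC header, FCS and inter-frame gap, 38 bytes in total) and plain IPv4/TCP headers with no options; real traffic such as iSCSI or NFS adds its own headers on top.

```python
# Per-frame Ethernet overhead on the wire (bytes), excluding the IP packet itself:
# preamble + start-of-frame delimiter (8), MAC header (14), FCS (4), inter-frame gap (12)
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12  # 38 bytes
# Assumption: plain IPv4 + TCP headers with no options (20 + 20 bytes)
IP_TCP_HEADERS = 20 + 20


def payload_efficiency(mtu: int) -> float:
    """Fraction of wire bytes that carry TCP payload for full-size frames."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETHERNET_OVERHEAD)


def frames_per_second(link_bps: float, mtu: int) -> float:
    """Full-size frames per second needed to fill a link of link_bps bits/s."""
    return link_bps / 8 / (mtu + ETHERNET_OVERHEAD)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: efficiency {payload_efficiency(mtu):.1%}, "
              f"{frames_per_second(10e9, mtu):,.0f} frames/s at 10 Gbit/s")
```

With these assumptions, MTU 1500 gives about 94.9% payload efficiency and roughly 812,744 frames per second at 10 Gbit/s, while MTU 9000 gives about 99.1% and roughly 138,305 frames per second. The gain is mostly the roughly six-fold drop in packet rate (and per-packet CPU work), not extra bandwidth.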

The SFP+ DAC cable has a Cisco part number on it. As the FreeNAS server, the XCP-ng server and my workstation are next to each other in the rack, I went with Direct Attached Copper instead of SFP+ fibre transceivers and fibre cabling, which cost more than a DAC cable. If I were going a long distance then I'd choose fibre transceivers and fibre cabling instead of copper; over short runs, DAC also has slightly lower latency than fibre because there is no electrical-to-optical conversion. The reason I'm using a direct link instead of a 10GbE switch is that 10gig switches are so expensive compared with 1Gbps switches.

I installed one of these cards in my workstation, as I do everything over NFS (Network File System) in Linux to Telsa, which is the storage server. I have also set the MTU to 9000 on my workstation. I don't see the full 10GbE speed because the disks in Telsa are slower; I get around 500 to 550 MB/s on an NFS transfer, which is an improvement over the 150 to 200 Mbps I had on a 2Gbps LAG on my workstation.
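A quick way to sanity-check the "disks are the bottleneck" conclusion is to convert the link speed into the same units as the transfer rate. This sketch assumes the 500 to 550 figure is in megabytes per second (decimal MB) and ignores protocol overhead, so it only gives an upper bound on what the link can carry; the function names are hypothetical.

```python
def gbit_to_mbyte_per_s(gbit_per_s: float) -> float:
    """Convert a line rate in Gbit/s to MB/s (decimal: 1 MB = 10**6 bytes, 8 bits per byte)."""
    return gbit_per_s * 1000 / 8


def link_utilisation(observed_mb_s: float, link_gbit_s: float) -> float:
    """Fraction of the raw line rate used by an observed transfer rate in MB/s."""
    return observed_mb_s / gbit_to_mbyte_per_s(link_gbit_s)
```

For example, `link_utilisation(550, 10)` comes out at 0.44, so an NFS transfer at that rate uses under half of a 10GbE link's raw 1250 MB/s, which is consistent with the disks rather than the network being the limit.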

Upgrading to the 10gig network has improved the 4Gbps LAG (well, of course it would, right?). On all of my VM servers that run in VLANs I can saturate a 1Gbps link very easily, which I couldn't when iSCSI was running on the same LAG interface.

Overall the upgrade was worth it, but I still want to get a 10GbE switch for the 10gig network at some point; I'll wait until the prices come down.

So here are some pictures of the initial testing.